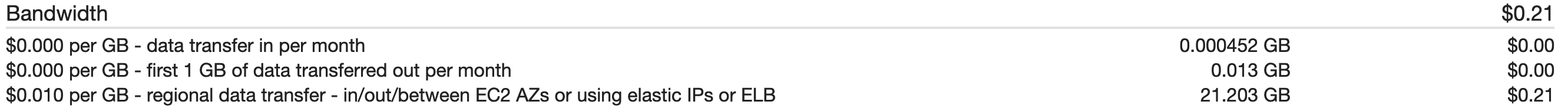

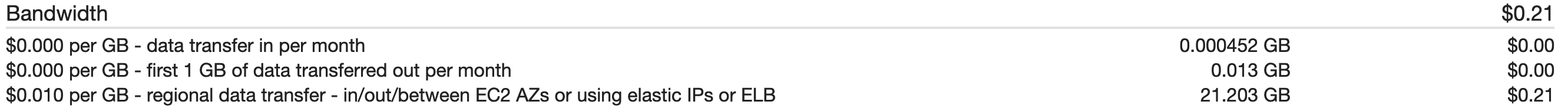

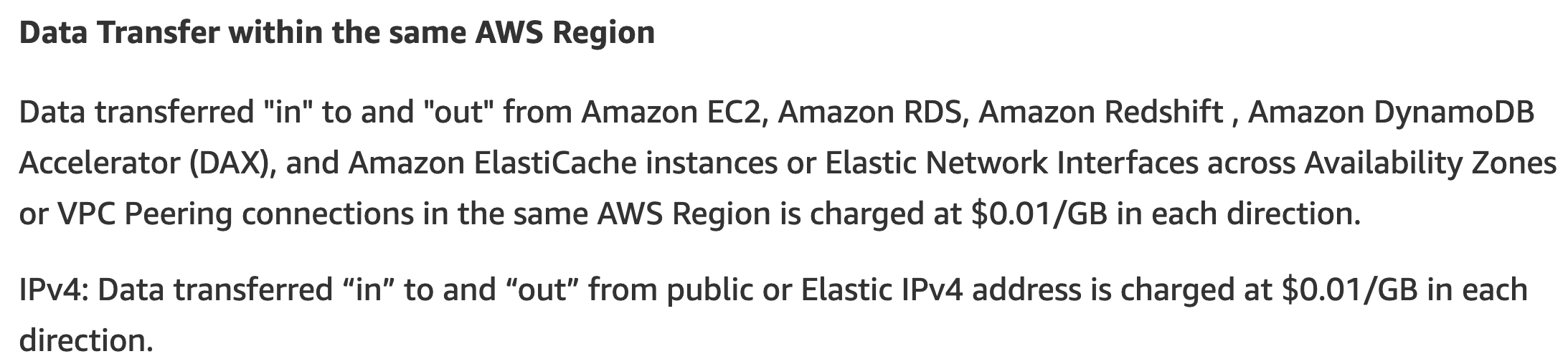

Recently I saw an intriguing article by Corey Quinn of lastweekinaws.com. His finding was that although AWS lists cross AZ traffic as costing $0.01 / GB in its documentation, cross AZ traffic actually costs $0.02 / GB. AWS charges twice: once for data going out of one AZ and again for data going into the other AZ.

This pricing is the same as cross region traffic – $0.02 / GB !

When I read this, it was hard to believe! For the last couple of years, I had always thought that cross AZ traffic was cheaper than cross region traffic. Many of my co-workers believed the same.

I just had to recreate the cross AZ tests in the post to convince myself.

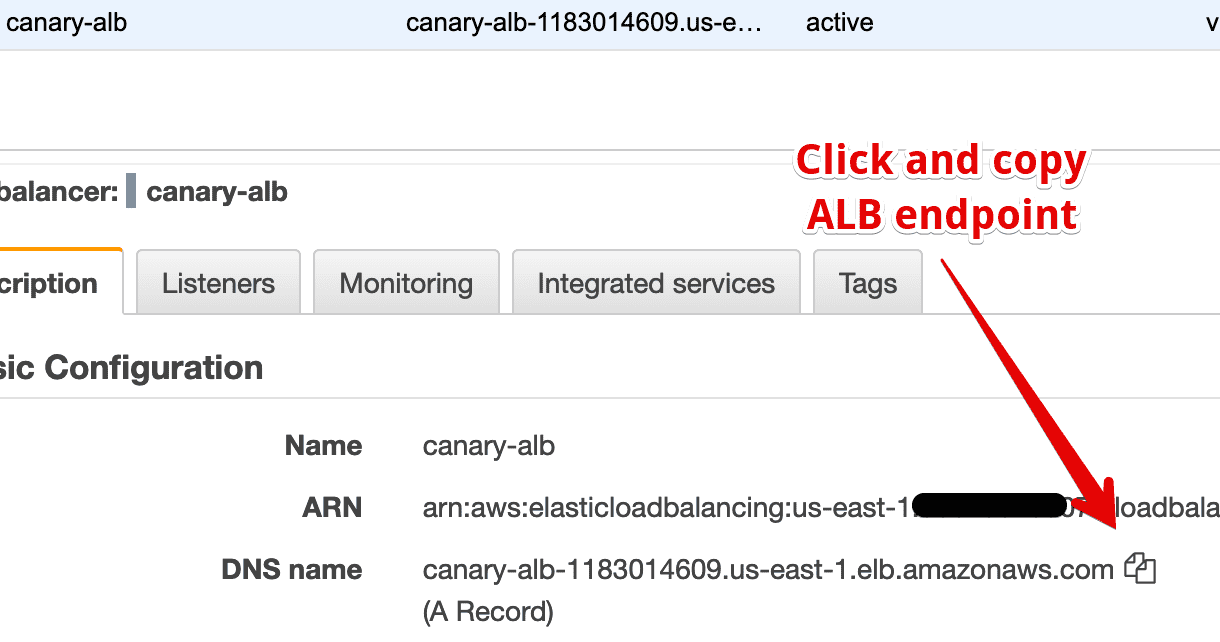

The test is simple, as Corey was kind enough to detail the steps. I’ve added some details below to make it even easier to recreate.

I chose a region where I had absolutely nothing else running, to rule out any external factors.

The Bill

Per the test, I sent 10GB of traffic from an EC2 instance in us-west-2a to another in us-west-2b. Once the bill came in, I had been charged for 20GB of data. So every 1GB transferred counted as 2.

Talking to AWS Support

After I reached out to AWS, they confirmed the results I saw. While the docs do not explicitly state that cross AZ costs are “double charged”, they do state that data “is charged at $0.01/GB each direction.”
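To make the arithmetic concrete, here is a minimal Python sketch of the charge for the test above. It assumes the $0.01/GB per-direction rate quoted from the docs; the function name `cross_az_cost` is made up for illustration, and real bills may differ by region or change over time.

```python
# Assumption: $0.01/GB in each direction, as quoted from the AWS docs above.
RATE_PER_GB_EACH_DIRECTION = 0.01  # USD

def cross_az_cost(gb_transferred, rate=RATE_PER_GB_EACH_DIRECTION):
    """Return (billed_gb, cost_usd) for traffic sent between two AZs."""
    # The same bytes are metered twice: once leaving the source AZ
    # and once entering the destination AZ.
    billed_gb = gb_transferred * 2
    return billed_gb, round(billed_gb * rate, 2)

billed_gb, cost = cross_az_cost(10)
print(f"{billed_gb} GB billed, ${cost:.2f}")  # prints "20 GB billed, $0.20"
```

So the 10GB test shows up as 20GB on the bill, for an effective rate of $0.02 per GB actually transferred.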

Reducing cross AZ data costs

As most organizations today deploy their services across multiple AZs for high availability, it’s difficult to reduce cross AZ data without the right architecture.

Here are two concepts being used today to combat rising cloud costs (note: implementing either is not easy!)

Service mesh (Envoy, Istio, etc.)

By adopting a service mesh architecture, it’s possible to force service to service communication to stay within the same AZ.

For example, if a service A container is running in us-east-1a, a service mesh sidecar container running alongside it can ensure all requests go to services also running in us-east-1a.

Implementing this with Envoy can be done through the zone aware routing feature, or by using the Endpoint Discovery Service to dynamically send each sidecar only the service endpoints (IP addresses) that are in its own AZ.
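The core idea can be sketched in a few lines of Python. This is only an illustration of same-AZ endpoint selection, not Envoy's actual algorithm or API: the `Endpoint` class, the `pick_endpoint` function, and the fallback to other zones when no local endpoint is healthy are all assumptions made for the example.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class Endpoint:
    """Hypothetical record for one instance of a service."""
    address: str
    zone: str
    healthy: bool = True

def pick_endpoint(endpoints, local_zone, rng=random):
    """Prefer a healthy endpoint in the caller's AZ; otherwise use any healthy one."""
    healthy = [e for e in endpoints if e.healthy]
    if not healthy:
        raise LookupError("no healthy endpoints available")
    same_zone = [e for e in healthy if e.zone == local_zone]
    # Assumed fallback: cross the AZ boundary (and pay for it) only when
    # nothing local can serve the request.
    return rng.choice(same_zone or healthy)

endpoints = [
    Endpoint("10.0.1.5", "us-east-1a"),
    Endpoint("10.0.2.7", "us-east-1b"),
]
print(pick_endpoint(endpoints, local_zone="us-east-1a").address)  # prints "10.0.1.5"
```

The fallback is a design choice: it trades a cross AZ charge for availability when the local AZ has no healthy instances, which keeps the benefit of running in multiple AZs.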

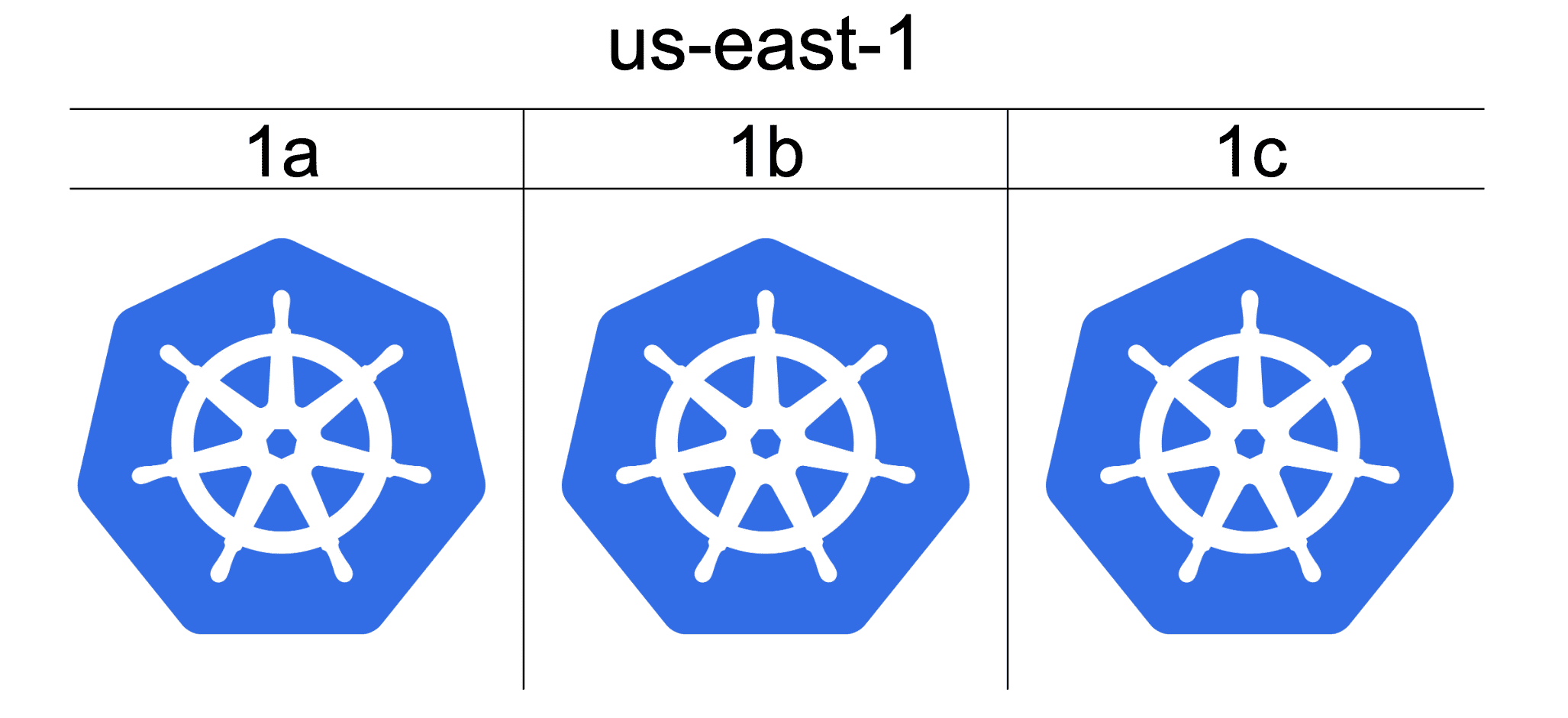

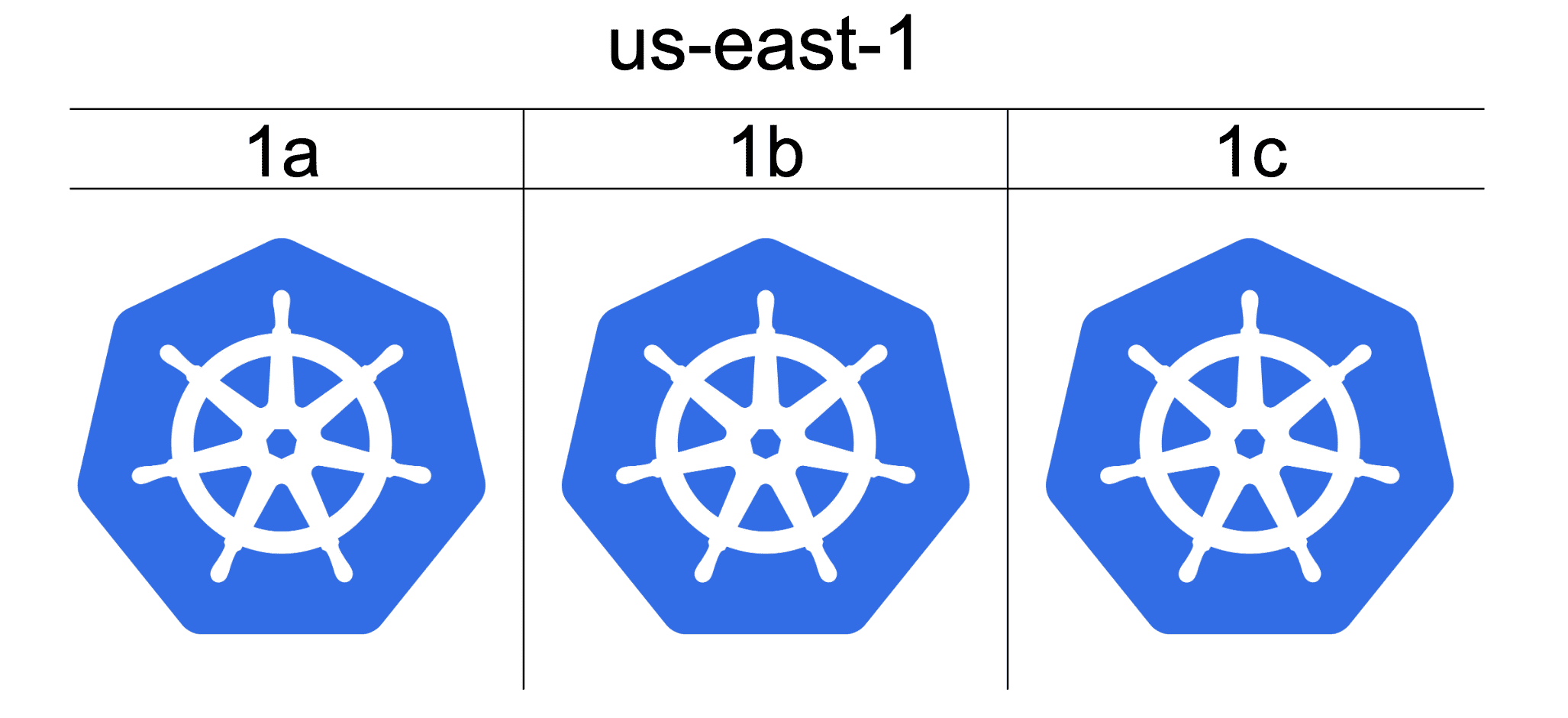

Cluster per AZ

This is a model being adopted by companies at scale like Tinder, Lyft and Reddit. They’re making it possible by using Kubernetes. The idea here is to have a production cluster per AZ, where each cluster has a complete replica of all your services – each cluster is an independent copy.

All communication within a cluster stays in the same AZ, because the traffic is pinned inside that cluster.

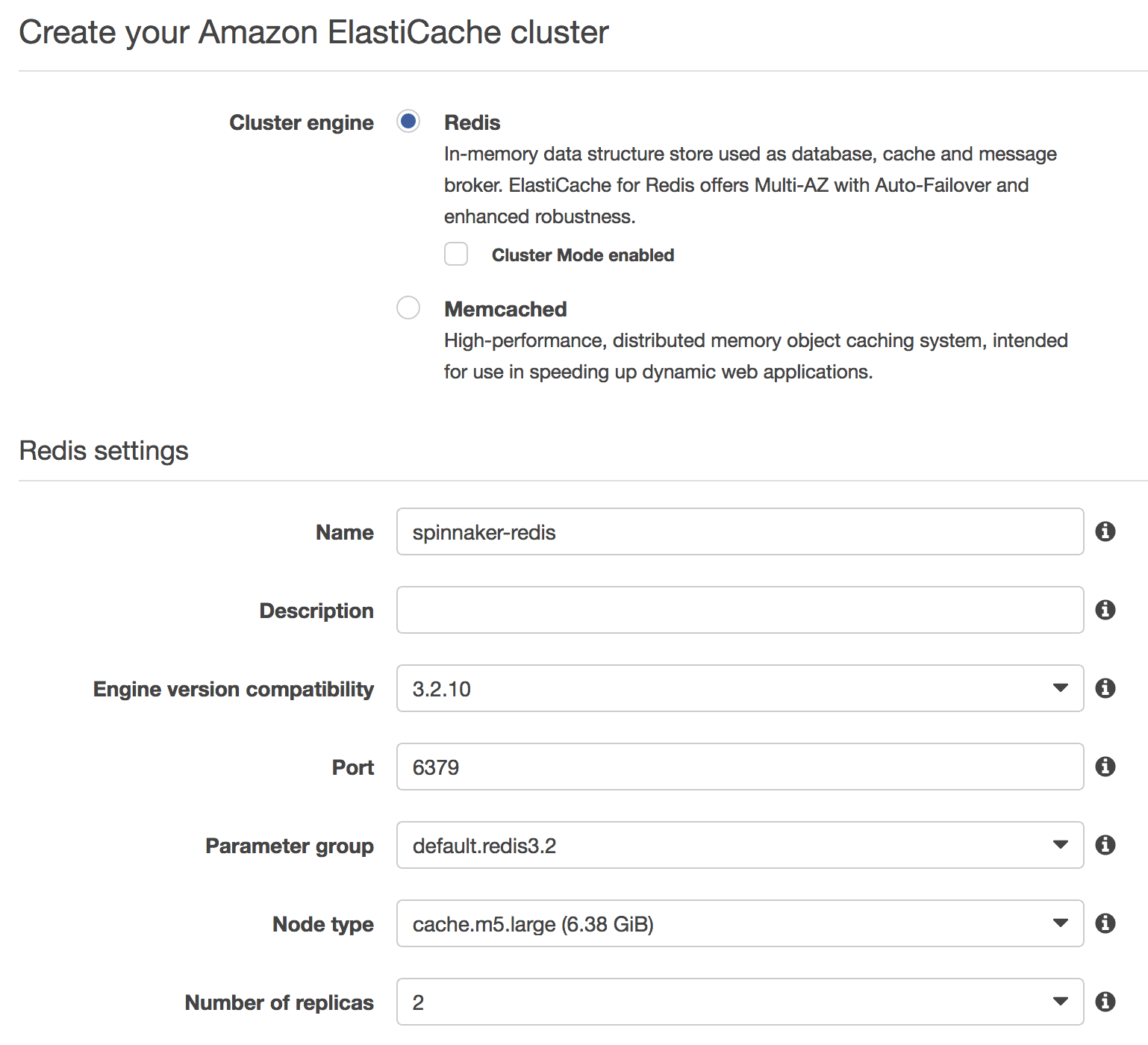

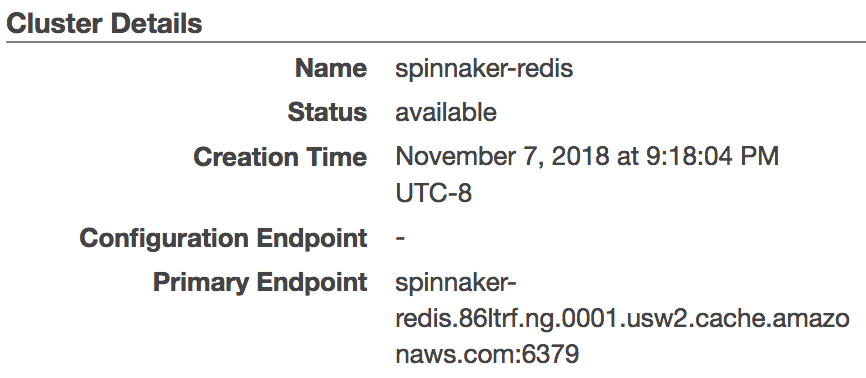

Of course, any shared data stores must be located outside the clusters. Managed data stores such as S3, DynamoDB and ElastiCache are a good fit.